This project fulfilled my capstone requirement during my senior year at Northeastern University.

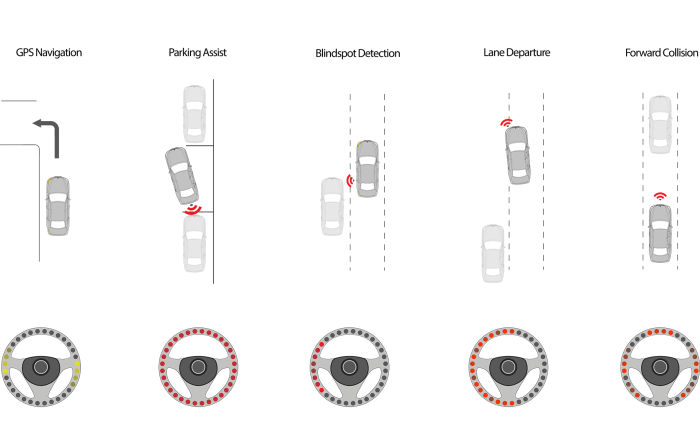

The challenge my group set out to solve was: Can we create a feedback system for drivers that is better than standard audio/visual notifications? Modern cars either come equipped with, or can be fitted with, a number of sensing modalities such as GPS, blind spot detection, or lane departure detection, and all this information has to be conveyed to users in an intuitive and unobtrusive way.

During our initial research, we found studies showing that audio/visual notifications typically produce slower responses than tactile feedback. In automobile collisions, even fractions of a second can be critical. Based on these findings, we made it our goal to improve driver reaction time in potentially life-threatening scenarios by creating a novel haptic feedback system. What we produced was a multi-signal vibrating steering wheel cover that can transmit intuitive, distinct patterns depending on the sensors it is connected to.

The cover was designed to be retrofitted onto existing steering wheels and to interact with third-party sensors. This meant the design needed to be sleek and unobtrusive, adding as little as possible to the overall dimensions of the steering wheel.
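To make the idea of sensor-specific patterns more concrete, here is a minimal host-side sketch of how events from third-party sensors might be mapped to vibration patterns. Everything in it is an assumption for illustration. The event names, motor indices, pulse timings, and the `Pattern`/`schedule` names are hypothetical, and the project's actual pattern set and firmware are not described in this writeup.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical layout: motor indices grouped into left and right regions of the rim.
LEFT_MOTORS: Tuple[int, ...] = (0, 1, 2)
RIGHT_MOTORS: Tuple[int, ...] = (3, 4, 5)


@dataclass(frozen=True)
class Pattern:
    """One haptic cue: which motors fire, how strongly, and with what rhythm."""
    motors: Tuple[int, ...]  # motor indices to drive together
    amplitude: float         # normalized strength, 0.0 to 1.0
    on_ms: int               # length of each pulse
    off_ms: int              # pause between pulses
    repeats: int             # number of pulses in the cue


# Hypothetical event names that a sensor adapter might emit.
PATTERNS: Dict[str, Pattern] = {
    "blind_spot_left": Pattern(LEFT_MOTORS, 0.6, 150, 150, 3),
    "blind_spot_right": Pattern(RIGHT_MOTORS, 0.6, 150, 150, 3),
    "lane_departure_left": Pattern(LEFT_MOTORS, 1.0, 400, 0, 1),
    "lane_departure_right": Pattern(RIGHT_MOTORS, 1.0, 400, 0, 1),
}


def schedule(event: str) -> List[Tuple[int, int, Tuple[int, ...], float]]:
    """Expand a sensor event into (start_ms, duration_ms, motors, amplitude) pulses.

    Unknown events produce an empty schedule, so unrecognized sensors stay silent.
    """
    pattern = PATTERNS.get(event)
    if pattern is None:
        return []
    period = pattern.on_ms + pattern.off_ms
    return [
        (i * period, pattern.on_ms, pattern.motors, pattern.amplitude)
        for i in range(pattern.repeats)
    ]
```

This design keeps the mapping in a single table, so supporting a new third-party sensor only requires a new entry. Using a side-specific region for each cue (left motors for left-side hazards) is one way to make a pattern feel intuitive without training.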

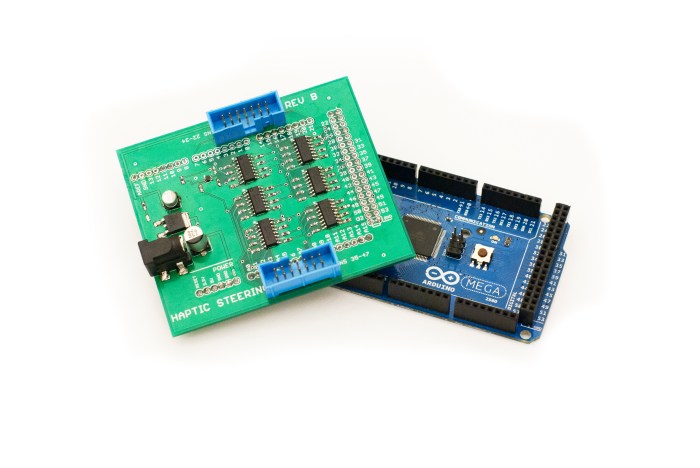

Underneath the top cosmetic layer is a motor harness composed of the vibration motors, plastic ribs for spreading vibration across a region, a foam substrate for preventing “vibration spillover”, and a ribbon cable for supplying power.

Miniature eccentric mass motors were used to generate the haptic signal. Motor actuation was managed and powered by a custom-designed shield and controlled by an Arduino. The design used pulse width modulation (PWM) along with a series of transistor arrays to control the vibration amplitude.
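The PWM amplitude control can be modeled in a few lines. This is a sketch under stated assumptions, not the project's firmware. It assumes an 8-bit PWM range (0 to 255, which Arduino's `analogWrite()` accepts on most boards) and one transistor switching each motor. It also assumes a hypothetical minimum duty cycle (`MIN_DUTY`) below which a small eccentric mass motor may not spin up. The names `amplitude_to_pwm` and `average_voltage` are illustrative.

```python
# Assumed constants; the project's real values are not documented.
PWM_MAX = 255   # 8-bit PWM range, as used by Arduino's analogWrite() on most boards
MIN_DUTY = 0.3  # hypothetical duty cycle below which a small ERM motor may stall


def amplitude_to_pwm(amplitude: float) -> int:
    """Map a normalized vibration amplitude (0.0 to 1.0) to an 8-bit PWM value.

    0.0 switches the motor off; any nonzero request is lifted to at least
    MIN_DUTY so the eccentric mass motor actually starts spinning.
    """
    if not 0.0 <= amplitude <= 1.0:
        raise ValueError("amplitude must be between 0.0 and 1.0")
    if amplitude == 0.0:
        return 0
    duty = MIN_DUTY + (1.0 - MIN_DUTY) * amplitude
    return round(duty * PWM_MAX)


def average_voltage(pwm: int, supply_volts: float) -> float:
    """Approximate average voltage across a motor switched at the given PWM value."""
    return supply_volts * pwm / PWM_MAX
```

On the board, the resulting value would be passed to `analogWrite()` on a PWM-capable pin driving that motor's transistor. With eccentric mass motors, raising the duty cycle increases motor speed, so vibration strength and frequency rise together.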

We created our own test simulation and had student peers and faculty members test the steering wheel cover. Testing showed that users could clearly differentiate between signal types. In the reaction time portion of the test, users showed a 10% median improvement in reaction time compared to visual feedback.
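The writeup does not say exactly how the 10% median figure was computed. The sketch below assumes one plausible method. It computes each participant's relative reduction in mean reaction time (haptic versus visual) and then takes the median across participants. The function names and the input layout are hypothetical.

```python
from statistics import mean, median
from typing import Mapping, Sequence, Tuple


def relative_improvement(visual_ms: Sequence[float], haptic_ms: Sequence[float]) -> float:
    """Fractional reduction in one participant's mean reaction time with haptic cues.

    A positive value means the participant reacted faster to haptic feedback.
    """
    visual = mean(visual_ms)
    return (visual - mean(haptic_ms)) / visual


def median_improvement(trials: Mapping[str, Tuple[Sequence[float], Sequence[float]]]) -> float:
    """Median of per-participant improvements.

    `trials` maps a participant ID to (visual reaction times, haptic reaction times).
    """
    improvements = [relative_improvement(v, h) for v, h in trials.values()]
    return median(improvements)
```

Under this reading, a result of 0.10 corresponds to a 10% median improvement. Using the median rather than the mean limits the influence of a few unusually fast or slow participants, which helps when the participant pool is small.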