This work was completed as part of my master's thesis requirements during the late spring and summer of 2018. For more detail, please see the associated thesis document here or the associated GitHub repository here.

Imitation learning is a technique for learning a policy from a collection of state-action pairs demonstrated by an expert (a human, an optimal controller, a path planner, etc.). A major challenge for early imitation learning algorithms such as behavior cloning is that the labeled training states are drawn from the expert's state distribution, whereas at test time the learner must act in the states induced by its own policy. Once a small mistake takes the learner into states the expert rarely or never visited, it has no labels to guide its recovery, and errors compound. This mismatch has been shown, both in theory and in practice, to produce poorly performing agents, even when they are trained on large datasets.
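Behavior cloning reduces to ordinary supervised learning on expert data. The minimal sketch below illustrates this on a hypothetical toy task that is not from the thesis: an agent on the integer line [-10, 10] should step toward 0, and each action has a small chance of slipping by one cell. The names `expert_policy`, `step`, `rollout`, `fit_tabular` and `behavior_cloning` are illustrative, and the lookup-table learner stands in for a real classifier. Because every training state comes from expert rollouts started in [-2, 2], the learned table has no entry for a state such as 5, so the learner falls back to a default action there while the expert would step toward 0.

```python
import random
from collections import Counter, defaultdict

# Hypothetical toy task (not from the thesis): an agent on the integer
# line [-10, 10] should walk toward 0.
MIN_S, MAX_S = -10, 10


def expert_policy(s):
    """Expert: step toward the origin (-1, 0 or +1)."""
    return -1 if s > 0 else (1 if s < 0 else 0)


def step(s, a, rng, slip=0.1):
    """With probability `slip`, perturb the action by +/-1; keep the state on the line."""
    if rng.random() < slip:
        a += rng.choice((-1, 1))
    return max(MIN_S, min(MAX_S, s + a))


def rollout(policy, rng, start, horizon=20):
    """Return the states visited while following `policy` for `horizon` steps."""
    s, visited = start, []
    for _ in range(horizon):
        visited.append(s)
        s = step(s, policy(s), rng)
    return visited


def fit_tabular(pairs, default=0):
    """Supervised learner: majority-vote action per state, `default` for unseen states."""
    votes = defaultdict(Counter)
    for s, a in pairs:
        votes[s][a] += 1
    table = {s: c.most_common(1)[0][0] for s, c in votes.items()}
    return lambda s: table.get(s, default)


def behavior_cloning(n_traj=10, seed=0):
    rng = random.Random(seed)
    pairs = []
    for _ in range(n_traj):
        # Training states come only from the *expert's* own rollouts.
        for s in rollout(expert_policy, rng, start=rng.randint(-2, 2)):
            pairs.append((s, expert_policy(s)))
    return fit_tabular(pairs), pairs


policy, pairs = behavior_cloning()
print(sorted({s for s, _ in pairs}))  # expert data never leaves [-2, 2]
print(policy(5), expert_policy(5))    # prints: 0 -1 (unseen state, no label)
```

In a real task the learner generalizes imperfectly, so it eventually drifts into such unlabeled states, which is the train/test mismatch described above.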

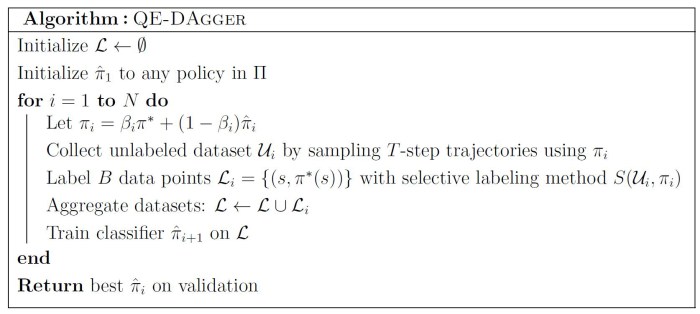

To address this train/test distribution mismatch, Ross et al. proposed the Dataset Aggregation (DAgger) algorithm in 2011. DAgger comes with strong no-regret guarantees and relies on an interactive expert that the learner can query at any time. While this approach and its derivatives are still state of the art, they place a large burden on the expert, which is expected to label every state, in every trajectory, for every policy produced during learning. For expensive or complex experts, this can be extremely impractical.
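The DAgger loop can be sketched as follows, using the same hypothetical toy task and lookup-table learner (all names are illustrative, not from the thesis). Each iteration rolls out a mixture of the expert and the current learner, asks the expert to label every visited state, aggregates those labels into a single dataset, and retrains on it. The geometric schedule for the mixing weight `beta` is one common choice rather than a requirement, and for brevity this sketch returns the last policy instead of selecting the best one on held-out rollouts as Ross et al. do.

```python
import random
from collections import Counter, defaultdict

# Hypothetical toy task standing in for a real environment.
MIN_S, MAX_S = -10, 10


def expert_policy(s):
    """Expert: step toward the origin (-1, 0 or +1)."""
    return -1 if s > 0 else (1 if s < 0 else 0)


def step(s, a, rng, slip=0.1):
    """With probability `slip`, perturb the action by +/-1; keep the state on the line."""
    if rng.random() < slip:
        a += rng.choice((-1, 1))
    return max(MIN_S, min(MAX_S, s + a))


def fit_tabular(pairs, default=0):
    """Supervised learner: majority-vote action per state, `default` for unseen states."""
    votes = defaultdict(Counter)
    for s, a in pairs:
        votes[s][a] += 1
    table = {s: c.most_common(1)[0][0] for s, c in votes.items()}
    return lambda s: table.get(s, default)


def dagger(n_iters=5, n_traj=4, horizon=20, decay=0.5, seed=0):
    """DAgger-style loop; returns (final policy, aggregated dataset, expert query count)."""
    rng = random.Random(seed)
    dataset, queries = [], 0
    learner = fit_tabular(dataset)  # arbitrary initial policy: always action 0
    for i in range(n_iters):
        beta = decay ** i  # beta = 1 in the first iteration, then decays geometrically
        for _ in range(n_traj):
            s = rng.randint(-5, 5)
            for _ in range(horizon):
                label = expert_policy(s)  # the expert labels EVERY visited state
                dataset.append((s, label))
                queries += 1
                # Mixture policy: follow the expert with probability beta, else the learner.
                a = label if rng.random() < beta else learner(s)
                s = step(s, a, rng)
        learner = fit_tabular(dataset)  # retrain on the aggregated dataset
    return learner, dataset, queries


policy, dataset, queries = dagger()
print(queries)  # 5 iterations x 4 trajectories x 20 steps = 400
```

The query counter makes the expert's burden explicit: every one of the 400 visited states is sent to the expert, regardless of how confident the learner already is there.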

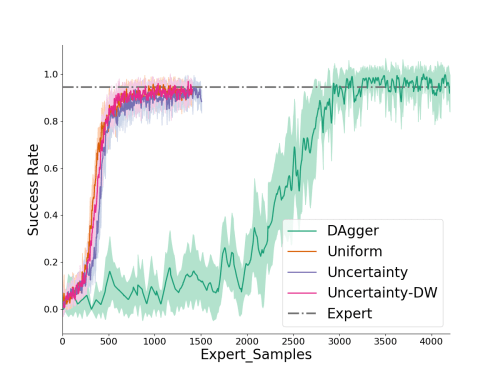

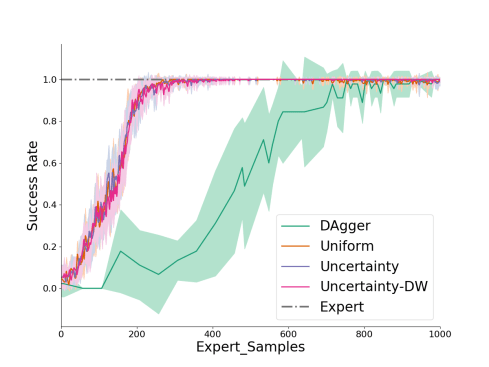

I proposed two methods for reducing the number of queries posed to the expert while aiming to at least match the performance of the traditional DAgger algorithm: increasing the number of policy updates relative to the number of expert queries, and applying active learning strategies from supervised learning to imitation learning. Together, these modifications to DAgger form the Query-Efficient Dataset Aggregation (QE-DAgger) algorithm.
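The two ideas can be illustrated with a hypothetical sketch; it is not the thesis implementation. Here the expert is queried only when the learner is uncertain about the current state, using thresholded, stream-based uncertainty sampling (a standard active learning strategy), and the policy is updated after every new label rather than once per batch of trajectories. The toy task, the vote-based `uncertainty` score, the `threshold` parameter and the name `selective_dagger` are all assumptions made for illustration.

```python
import random
from collections import Counter, defaultdict

# Hypothetical toy task standing in for a real environment.
MIN_S, MAX_S = -10, 10


def expert_policy(s):
    """Expert: step toward the origin (-1, 0 or +1)."""
    return -1 if s > 0 else (1 if s < 0 else 0)


def step(s, a, rng, slip=0.1):
    """With probability `slip`, perturb the action by +/-1; keep the state on the line."""
    if rng.random() < slip:
        a += rng.choice((-1, 1))
    return max(MIN_S, min(MAX_S, s + a))


def uncertainty(votes, s):
    """1 minus the share of the most common label at s; unseen states score 1."""
    c = votes.get(s)
    if not c:
        return 1.0
    return 1.0 - c.most_common(1)[0][1] / sum(c.values())


def selective_dagger(n_traj=20, horizon=20, threshold=0.0, seed=0):
    """Hypothetical query-efficient loop: label only uncertain states, update after each label."""
    rng = random.Random(seed)
    votes = defaultdict(Counter)  # training data stored as per-state label counts
    queries = 0

    def learner(s):
        # Lookup-table policy that reads `votes` directly, so it changes after every label.
        c = votes.get(s)
        return c.most_common(1)[0][0] if c else 0

    for _ in range(n_traj):
        s = rng.randint(-5, 5)
        for _ in range(horizon):
            if uncertainty(votes, s) > threshold:
                votes[s][expert_policy(s)] += 1  # expert query (active learning step)
                queries += 1
            s = step(s, learner(s), rng)  # only the learner acts; no expert mixing
    return learner, votes, queries


policy, votes, queries = selective_dagger()
print(queries)  # at most 21 queries for 400 environment steps
```

Because this toy expert is deterministic, each state needs a single label, so the run issues at most 21 queries (one per state on the line) over 400 environment steps, whereas labeling every visited state would cost 400. With a noisy expert or a learner that generalizes across states, `threshold` would trade query count against label coverage.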

With QE-DAgger, a significant reduction in the number of expert samples required to achieve competence was observed across multiple simulation environments, ranging from simpler game-playing tasks such as Lunar Lander and Space Invaders to more complex and practical manipulation tasks such as OpenAI's Fetch Robot environments.